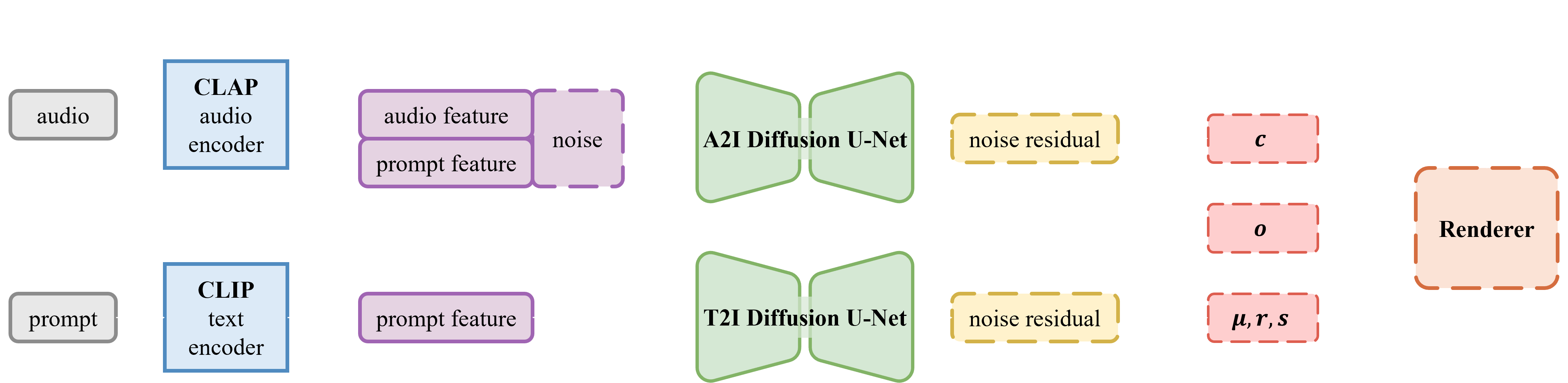

Method Overview

SFX-to-3D Generation Results

Audio: 🔊 (Fire Crackling)

Audio: 🔊 (Forest)

Audio: 🔊 (Underwater Bubbling)

Audio: 🔊 (Snow)

Our system generates audio-driven 3D meshes and textures from only a single audio file, using pretrained 2D diffusion models.

Ablation: Cross-Modality 3D Generation

Audio: 🔊 (Null)

Text: 💬 "A chair with fire crackling effect"

Audio: 🔊 (Null)

Text: 💬 "A chair with fire crackling effect"

Audio: 🔊 (Fire Crackling)

Text: 💬 "A Chair"

Audio: 🔊 (Fire Crackling)

Text: 💬 "A Chair"